I’ve been interested in computing since I was a kid. The ability to tinker with the internals of a machine, the brutalism of DOS, Windows 3.1 and Windows 95’s interfaces (those big grey buttons)… It was a great time for young persons to get started with IT, programming, and anything computer science. A great time to actually create something using a completely new medium, a digital canvas.

Today, a lot of things have changed. Many for the better, you could argue, but it seems to me that computing today is “just not the same” anymore. The web has come around. Everything has to be touch and haptic, with 500ms animations all over the place, hiding away not only the underlying complexity but also the potential for discovery, learning, and true creativity.

Over the past week or so, I’ve been jotting down a list of small annoyances. Not one of these is really a deal breaker on its own, but put together, it is clear (at least from my perspective) that a lot of things have changed. And I’m not the only one who thinks so (more on that later).

On Windows and Interfaces

- I come home from a two-week trip to find that my old Windows 10 laptop, which is being used as a media centre machine, has tripped all over itself. “Critical process died,” or something like that. Sure, “blue screens” on Windows 10 are not blue anymore and now show smileys and pretty QR codes you can scan to be redirected to a Google search, with the first result being a ten-minute YouTube video with 8 minutes of intro song and fumbling around with Notepad’s font settings before presenting the quote-unquote “solution” (hey, did you try running this adware-infested “cleaner”?). The strange thing is that this seems to happen more often when you leave machines unattended for a while.

- Windows 10 comes with an amazing update cycle and an approach to quality control best described as “let’s all be beta testers”. As of now, I have two laptops whose Wi-Fi connection starts crapping out after a reasonable amount of uptime. A third laptop’s Wi-Fi just softlocks after a while, and will even prevent the machine from restarting normally.

- That last machine still hasn’t received its “creators update,” by the way, presumably because it has some Bluetooth chip Microsoft just can’t figure out. Strange, as it has been working fine with the current (what is it, anniversary update?) builds. Did the folks in Redmond suddenly decide to completely overhaul the driver model in the creators update? It turns out that manually initiating the update doesn’t cause any issues, but doesn’t this defeat the purpose of automatic updates, then?

- Speaking of updates, I’ve had it happen more than once that Windows decided to update in the middle of the night (fine) while I still had an unsaved document or presentation open (not fine), which it then forcibly closed to do its thing. Come on. Even if you agree with the aggressive update style (which I can understand from a certain perspective), at least cancel the restart and present the user with a warning message when they wake up. Word… PowerPoint, these are your products, Microsoft. Or at least force the documents to save somewhere, even when autosaving is turned off. How hard is it to fix what I assume is a very common annoyance?

- Meanwhile, Windows tries to put more and more configuration settings in its new, “flat” interface, but no worries: with a few more clicks you can still get to the good ole Control Panel. If you’re really lucky, you might encounter some icons that are still there from the 16-bit era. It’s like putting five layers of wallpaper in your living room followed by three coats of paint (not unlike my own living room), but missing a spot every time. Sure, I get that backwards compatibility is a thing, though still…

Interfaces in general have become horrid. An interesting discussion on Twitter has lots of people talking about this (funny that a separate website is needed to kind-of properly render out a list of tweets in a somewhat readable format):

amber-screen library computer in 1998: type in two words and hit F3. search results appear instantly. now: type in two words, wait for an AJAX popup. get a throbber for five seconds. oops you pressed a key, your results are erased

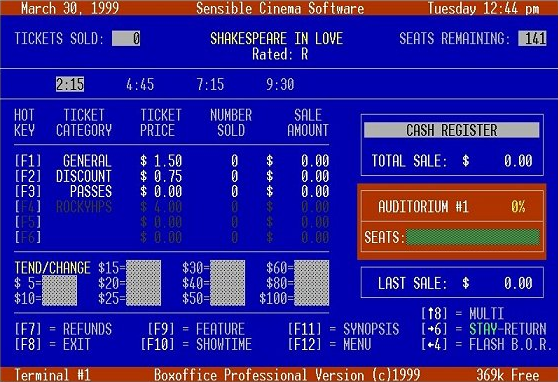

A lot of this comes from web-design-creep, but a lot of other things are to blame as well. In a way, I miss ugly, brutalist but completely focused and clear interfaces like this:

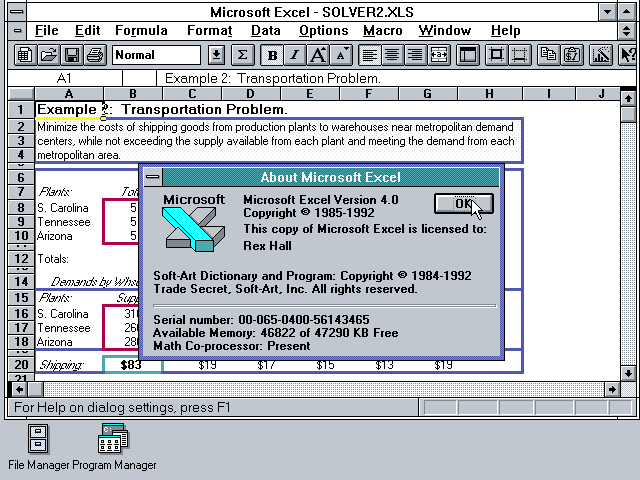

Keyboard shortcuts only, true, though the focused field is clearly visible, with global menu items that clearly indicate where you’re going. Even Windows 3.1 was actually very powerful in this sense:

Buttons are buttons. Simple as that. Look at their crisp outline and how they pop off the screen. Everything about this screams “you can click me and I will do something”.

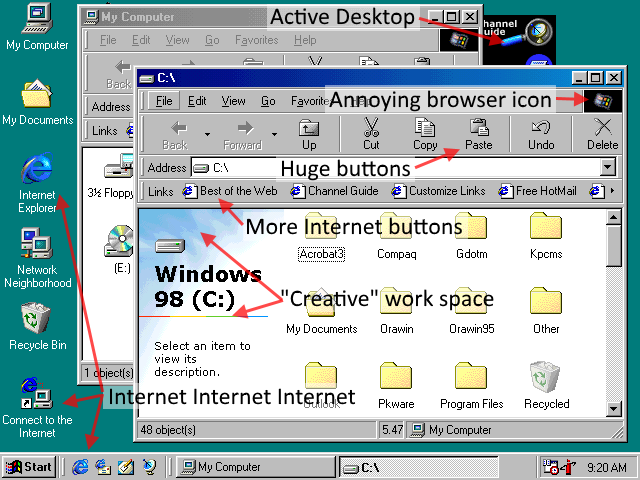

This went fine until Windows 98 came along, where you see the first evidence of the web’s tentacles getting inside the OS:

What is this? Why does there need to be a cloud in my explorer window? Just because we can? To show that we can render 256 colors now? Buttons have become larger, though for what? Meanwhile, Active Desktop was a thing for a while that polluted the desktop with Internet page widgets. Even Vista tried to do this again with gadgets or whatever they were called. So many distractions, so many design inconsistencies.

But at least, we lived in exciting new times then.

Today, we’re surrounded with boring, flat interfaces that sacrifice usability in order to look as clean, neutral, and uninspired as possible, and where any piece of text on the screen can turn out to be a “link” that can be clicked. Good luck, dear user! In a way, Windows 3.1 manages to look quite pretty and clear by comparison, as https://hackernoon.com/win3mu-part-1-why-im-writing-a-16-bit-windows-emulator-2eae946c935d, written by a chap who’s working on a 16-bit Windows emulator, shows. I’d even tip my hat to Dropbox for going with some fresh design ideas instead of the bog standard translucency-animations-whitespace combo. Speaking of whitespace: try switching your Gmail display mode from “comfortable” to “condensed”. The first certainly does not make me “comfortable”.

It’s time that we start to rethink interfaces. I find it strange to be looking at projects such as Lanterna or blessed-contrib and go “wow, that looks neat!” I’m not calling for a return to 80x25 CP437 or 3270, especially not after having worked with those in a mainframe environment, though there is a certain “once you get to know it, it actually works fantastic” vibe present in those, a sentiment which I’m sure is shared by e.g. vim users as well.

On Devices, Apple and Linux

- I don’t want to single out Windows… Switch to the Apple ecosystem, you say? Overpriced boxes of underperforming hardware? Having to carry around six dongles is not “courage”. Neither is a touch bar, a unibrow, an exploding iPhone 8, an iOS 11 full of bugs (what is this, even), an App Store which can only be used after entering payment details, or not being able to install VPN apps whilst living in a nation which censors the Internet. (Edit: let alone the recent Unicode rendering bug.)

- Not that Android is that much better, especially not every time I encounter an app that wants to make phone calls, send text messages, upload all of my data, access the mic and “draw over other apps.” All of this, just to check in for a flight?

- By the way, go to the developer options in Android and disable all animations for a minute. See how that feels? Amazing. I didn’t know my phone was so fast. (As an even more drastic alternative, try switching to a monochrome color scheme during the day… it’s actually quite refreshing.)

- Luckily there’s still Linux, our freedom-beacon in a sea of oppression, right? That is if you’re willing to put up with the hellspawn that is systemd.

- Also: good luck using your Linux laptop to give a presentation at some corp where they’re using some FuYouDianNoa brand of projectors which only work in some kind of devil’s 888x666 resolution. At least Windows has this part figured out. On Linux, you can choose between two options. First, you can disable your laptop screen and awkwardly turn your head 180 degrees around like you’re doing an owl imitation to see what’s on the projected screen whilst pressing down the ALT key and trying to drag-and-drop all those windows which are still stuck in “dark screen land”. Of course, once you close your laptop, disconnect the HDMI cable and scramble to run out, you’ll have to restart the machine nine out of ten times later on because you forgot to reset the screen settings and Linux has ignored the fact that you’re back in your hotel room with no projector attached anymore. The second option involves duplicating your screen, but since laptop makers (in their infinite wisdom) think that 3200xWhatever screens on 15” displays were a good idea and projector makers agree on a solid 4:3, good luck seeing the left and right sides of the screen on your laptop, or on the projector, depending on the moon’s phase. Sure, xrandr can be set up in such a way that you have the perfect setup, complete with scaling going on, just like Windows, but be prepared to arrive half an hour early and apologize a lot to the person responsible for making sure “the screen works” (“normally our speakers don’t have any problem connecting”). No one can be bothered to come up with a proper GUI for this stuff, most likely because making “mobile first desktop environments” is cooler.

- And then there’s still PulseAudio, Wayland, Mir… all great technology, each with their own little traps to avoid. Meanwhile, it’s still trivial to write an X11 keylogger, copy-pasting still works in various, unexpected ways, and I’d never use Linux for a live audio show as you never know when the kernel decides that “sound is just not a priority for me right now, let’s start swapping”.

- But at least your machine will be “free and open”, right? Sure, except for this black box of microprocessor code that’s running inside your modern CPU. Intel’s Management Engine is a great example. To quote the EFF: “Since 2008, most of Intel’s chipsets have contained a tiny homunculus computer called the Management Engine (ME). The ME is a largely undocumented master controller for your CPU: it works with system firmware during boot and has direct access to system memory, the screen, keyboard, and network.” I’m laughing my back off every time I hear a big corporation talk about the need to disallow non-vetted software, take away admin rights and to lock down machines as well as possible. If it makes you feel better, I guess. (Edit: so when I wrote this, this was mere months before Spectre and Meltdown… Jesus!)

- No, to understand all of this and to be fully secure, you need to have studied music.

I’m not done yet.

- All these “smart” tablets, phones, fridges and whatnot need to update a bajillion apps if you don’t use them for a few weeks and start to slow down after every OS update. If you’re lucky, you might even have a device which “smartly” sends your whole contact book to some state actor or “revenue partner”. Wireshark your own network and see in real-time how your personal data gets sent out.

- Luckily I’m virtually immune to the addictive lure of Facebook and friends, though I can’t help but feel bad about the effect they have on others. A “show of hands” among my first-year students indicates that many consider themselves addicted to social media. Begging for likes on Instagram, taking selfies, and close-ups of food. Some of them even admit to sleeping with their phone under their pillow. Being connected 24/7 to the world might be a good thing, but having a normal conversation from time to time is nice as well. Isn’t it time we start teaching this to our next “digital generation?”

On Gaming

- So you might wish to unwind with a game to forget all of the above. I’m asked to try the “Xbox Insider Hub” to beta test some game which crashes with some sort of “bad HTTP request” message. Perfect.

- As if we didn’t have enough “stores” already. Windows Store, Steam, GOG Galaxy, UPlay, Origin, Rockstar Social Club. Sure, I have enough CPU cycles to burn. Never before has my scrollbar in my task manager process list been so long. What is all of this stuff doing?

- The same goes for “sync” apps, by the way. Dropbox, OneDrive, Google Drive is Now Called Backup and Sync from Google but-still-ignores-syncing-some-random-files (a bug that, for the life of me, I can’t figure out why it isn’t a top priority to fix).

- If you do finally manage to get into a game, be prepared to cough up money for microtransactions, “cosmetic unlockables”, “loot boxes”, (edit: and now: “live services”) and all those other gambling-inspired horrors that have come straight from the seventh hell that is mobile gaming to infest the rest of the gaming world. It’s a good sign that game companies are hiring such great personas as “behavioral economists” and “value-adding psychologists”, for sure. Just another five bucks here, another stolen credit card from mommy and daddy there… The wheel of fortune spins on. Will I reach this great new weapon? Ah no damn it, just passed it and ended up with another “Boring Regular Glock 201”. Well, I almost had it, right? No, you’re being played. Maybe spend another diamond to finish this building in five seconds instead of the normal two days waiting time for that game you’re playing on your phone?

On Programming

But at least programming is still a blast?

- Sure, compared to earlier times, we now live in a time where you can choose between three hundred different JavaScript frameworks, preferably one encumbered by patents, to ship apps with a whole web browser (preferably an outdated one to maximize the security risk) included just so your 100MB app can show an ugly drop down list which looks even more out of place than the rest of your OS’ widgets. Time for another redesign, I guess, perhaps now using Material Design?

- But no worries, we’ll just wrap it into a Docker container or this other new fancy “IoT-ready virtualization solution we’ve just found”, which totes takes care of all problems for us automagically. Did you also know this makes us 100% reproducible? Because any Dockerfile containing an apt-get-from-some-repo-which-might-be-up-or-not is totally reproducible, our devops team said so.

- In the same vein, you can use the same “modern stack” to write reactive apps where we can pretend HTTP never happened and the best way to deal with the DOM is to re-engineer everything from the Windows 3.1 times, just so this animation can run at a “native 60 FPS”.

- Good luck setting all of this up on some VPS box these days. Dear God, the number of hoops you need to jump through just to get an e-mail server running! What the hell are SPF and DKIM keys? Why is Gmail ignoring mails from my domain? Oh I get it, I should just cough up the five bucks per month to get Google Apps for Work set up.

- At least we don’t need to learn SQL any more and can just dump our unstructured data vomit into a NoSQL data lake with a Hadoop Hive Impala stack on top which is totally web scale and will allow us to do analytics (read: putting pie charts in reports) even more wrong even faster. Forget about SQL engines and just dump everything into some webscale-ready JSON store because our 10GB worth of data is now “big”. Oh, what’s that, people are coming back from that? What’d ya know?

- Luckily, at least our kids have access to great and powerful devices now, giving everyone the opportunity to get started with programming — oh wait. Take the iPad, a great content consumption machine with absolutely no quick way to program anything yourself. Yes, “10 PRINT “HELLO” 20 GOTO 10” was not the best way to teach fundamentals, but at least it was literally one key stroke away and got people interested.
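For what it’s worth, the SPF half of that e-mail question is less mysterious than it sounds: an SPF record is just a DNS TXT record listing which hosts may send mail for a domain. Below is a rough Python sketch of reading one. The record string and the `parse_spf` helper are made up for illustration, modifiers such as `redirect=` are not handled, and a real check would fetch the record from DNS and evaluate it against the sending IP instead of merely splitting it.

```python
# Illustrative only: split an SPF TXT record into its mechanisms.
# EXAMPLE is a hypothetical record (documentation IP range, example.com),
# and parse_spf is a made-up helper, not part of any library.

# Qualifier prefixes and the result they produce when a mechanism matches.
QUALIFIERS = {"+": "pass", "-": "fail", "~": "softfail", "?": "neutral"}


def parse_spf(record):
    """Return a list of (result, mechanism, value) tuples for an SPF record."""
    terms = record.split()
    if not terms or terms[0].lower() != "v=spf1":
        raise ValueError("not an SPF record")
    parsed = []
    for term in terms[1:]:
        qualifier = "+"  # the default when no qualifier is written
        if term[0] in QUALIFIERS:
            qualifier, term = term[0], term[1:]
        # Split on the first colon only, so IPv6 values stay intact.
        mechanism, _, value = term.partition(":")
        parsed.append((QUALIFIERS[qualifier], mechanism, value))
    return parsed


EXAMPLE = "v=spf1 mx ip4:203.0.113.0/24 include:_spf.example.com ~all"

if __name__ == "__main__":
    for result, mechanism, value in parse_spf(EXAMPLE):
        print(result, mechanism, value)
```

The trailing `~all` is the part that tends to matter: it tells receivers to treat mail from any host not listed earlier as a soft failure, which is one of several signals a receiver may use when deciding what to do with your mail.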

On AI

Oh, and speaking of data vomit.

- Everyone has gone AI crazy. Again. This is my field of research so I’m probably part of the problem. Hadoop all the things, Spark everything, put an extra Kafka there. That’s so 2015, didn’t you realize. Now we need that deep learning and them chatbots for those quick wins and new revenue streams. Does IBM Watson have those yet? Turns out that 90% of all chatbot solutions are just regex, preferably with a diagram drawing GUI so you can hire three new people as “chatbot modelers”, but good luck explaining that to your exec or “chief technology officer”. If you’re lucky you might find some knowledge systems related stuff with forward inference. Perhaps even some Prolog running inside. The 80’s called.

- Sure, some things are impressive: TensorFlow, PyTorch, all that deep learning. I’m still waiting for a self driving car to explain post-crash why it made a mistake. But who needs explanations when faced with an all-knowing algorithm? And let’s not even go into detail regarding all the issues around bias we’re feeding into our black box predictive models. It’s self learning, didn’t you know? We removed nasty features like gender and sexual orientation and race, so we’re totally compliant. Right, until your fancy model figures out all those correlations that have been encrusted in years of your data repositories to draw the same conclusions anyway. But as long as the predictions are correct, we don’t need to care about deeper societal issues, right?
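To make the “we removed the nasty features” point concrete, here is a toy Python sketch. Everything in it is an assumption made up for illustration: the data is synthetic, the `group` and `postcode` columns are hypothetical, and the “model” is a simple majority vote per postcode, not a real learning algorithm. The sensitive attribute is never shown to the model, yet its predictions still split along it, because postcode acts as a proxy.

```python
from collections import Counter, defaultdict


def make_rows():
    """Synthetic history of (group, postcode, approved) decisions.

    'group' is the sensitive attribute; the past decisions are biased on it,
    and postcode happens to correlate strongly with group.
    """
    rows = []
    rows += [("A", "1000", True)] * 45 + [("A", "2000", True)] * 5
    rows += [("B", "1000", False)] * 5 + [("B", "2000", False)] * 45
    return rows


def train(rows):
    """Toy 'model': the majority historical outcome per postcode.

    The group column is deliberately ignored, as in 'we removed it'.
    """
    votes = defaultdict(Counter)
    for _group, postcode, approved in rows:
        votes[postcode][approved] += 1
    return {pc: counts.most_common(1)[0][0] for pc, counts in votes.items()}


def approval_rate_by_group(rows, model):
    """Share of each group that the model would approve."""
    totals, approvals = Counter(), Counter()
    for group, postcode, _approved in rows:
        totals[group] += 1
        approvals[group] += model[postcode]  # True counts as 1
    return {g: approvals[g] / totals[g] for g in totals}


if __name__ == "__main__":
    rows = make_rows()
    model = train(rows)
    print(approval_rate_by_group(rows, model))
```

On this made-up data the model approves nine in ten of group A and one in ten of group B without ever having seen the group column.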

Back to the Web

The web is dying as well. “Does anyone remember websites?” words it perfectly:

Does anyone remember websites? These might be unfamiliar to anyone unexposed to the internet before 2005 or so, and may be all-but-forgotten for many others, obscured by the last 10 years of relentless internet development, but before mass social media platforms and amazing business opportunities on the internet, it was largely a collection of websites made by people who were interested in some subject enough to write about it and put it online. Does anyone remember when you stumbled on a new website written by some guy and read his first article, then clicked back to his homepage and saw he had a list of similar articles that looked like they’d be just as interesting.

That was great. The web was fun back then, a treasure trove of badly formatted but interesting or fun, plain pages. A lot of things I’ve used and am still using today in my research (from AI to evolutionary algorithms to cellular automata) I’ve learned from pages like this (I still remember spending days reading http://www.ai-junkie.com/ann/som/som1.html when I was about 16 years old!).

Others have said this as well.

I’ve been watching some nostalgia tech channels on YouTube recently. Things like Lazy Game Reviews, DF Retro, and The 8-bit Guy. Seeing these machines of old not only reminds us (or me, at least) of how far we’ve come, but mainly how simple, honest, “straight-to-the-metal” these things were.

I kind of miss that feeling.

What has happened to “computing” today? Perhaps, most likely even, all of this is due to me getting older, busier, less easily impressed, or simply a case of nostalgia goggles firmly stuck on my face, but I can’t shake the feeling that computing today has lost a lot of its appeal, its “magic”, if you will, compared to the days of old.

Today, we have an unfinished OS running on twenty devices, DRM schemes crippling our computing freedom, interfaces that are confusing and buggy, a web that is controlled by a few corrupt and manipulative companies and full of Russian ads and propaganda spreaders, and youth that is addicted to social media.

I used to laugh (silently) at “dinosaurs” I’d meet telling me about how all of this new stuff already existed in the eighties. I still laugh (silently), as I can still take glee in the fact that I at least try to keep up to date with all these new developments whereas they have given up and perhaps just want to emphasize their relevance, but I’m feeling increasingly sour as I start to wonder where, exactly, we’re trying to “evolve” to.